We explore how one of the most anticipated AI-based tools works.

Source: GitHub Copilot

Recently, GitHub and OpenAI released one of the most anticipated AI-based tools for developers: GitHub Copilot. The artificial intelligence (AI) tool is advertised as a pair programming assistant that does much more than traditional code autocomplete tools. Copilot is by no means intended to replace developers. Instead, it is meant to be used as an assistant that takes care of many of the “boring” and “repetitive” parts of programming, letting coders focus on the parts of the process that require human thinking and reasoning.

It is important to note that GitHub Copilot is based on a recent deep learning model published by OpenAI in a paper called “Evaluating Large Language Models Trained on Code”. This research paper introduces Codex, a GPT-3 derivative model fine-tuned on publicly available code from GitHub.

Code generation tools like Copilot have the potential to help millions of developers around the world. They can significantly speed up the development cycle by providing templates for the standard boilerplate code that is often rewritten. They may help developers working with unfamiliar programming languages. They can also improve code reliability by suggesting tests that match the code implementation, and much more.

However, since Copilot is based on large language models (LLMs) trained on very large corpora, it is not immune to the problems that similar LLMs have shown. In this piece, we go over some of the main characteristics of GitHub Copilot. We start by looking at Codex, the deep learning model that powers the tool, and discuss the advantages of Copilot and how it was trained. Then, we discuss some of the concerns about the system, such as copyright. If you have already used autocomplete tools such as Tabnine, you have tasted a fraction of what Copilot can do. However, as we will see, Copilot goes much further!

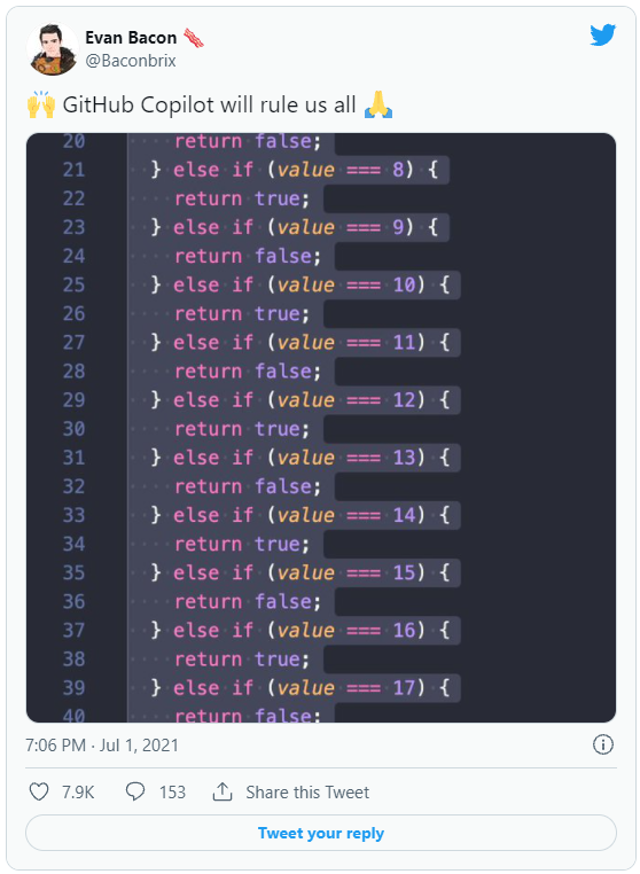

Copilot, in its current state, is not meant to replace programmers at any level. Indeed, as the example shows, its solutions are sometimes not the best.

Large Language Models and Codex

The recent success of large language models (LLMs) like GPT and BERT relies on three stepping stones.

- Advances in neural network architectures, such as the Transformer

- The availability of large public text corpora, such as Wikipedia (which alone contains 3 billion tokens)

- Advances in high-performance computing on specialized hardware, such as GPUs

These are the foundations that allowed deep neural networks to revolutionize NLP applications in the last decade. More specifically, the availability of large code repositories on platforms such as GitHub and GitLab allowed the same set of methods to advance one of the most challenging NLP applications: code synthesis.

Codex is a specialized GPT model trained on publicly available code and designed to generate computer code from a given context. A clear example is producing a Python function from a natural-language description of what it should do, called a docstring.

To understand how Codex works, we need to touch on Generative Pre-trained Transformer (GPT), a family of language models developed by OpenAI.

To start, a language model is simply a machine learning model that learns to generate text by optimizing a simple task called next-word prediction. The idea is to learn the probability distribution of a token given its context. Examples include predicting the next character of a word from the preceding characters, or predicting the next word of a sentence from the words that come before it.
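To make next-word prediction concrete, here is a minimal sketch, assuming a toy three-sentence corpus and a bigram context (only the previous word is used). GPT models condition on much longer contexts with a Transformer rather than word counts, so treat this purely as an illustration of the objective; `train_bigram`, `next_word_distribution`, and `suggest` are hypothetical names.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each preceding word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_distribution(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Estimate P(next word | previous word) by normalizing the counts."""
    following = counts.get(prev.lower(), Counter())
    total = sum(following.values())
    return {word: c / total for word, c in following.items()} if total else {}


def suggest(counts: dict[str, Counter], prev: str, k: int = 3) -> list[str]:
    """Return the k most probable next words (ties broken alphabetically)."""
    dist = next_word_distribution(counts, prev)
    return sorted(dist, key=lambda w: (-dist[w], w))[:k]


corpus = [
    "the model predicts the next word",
    "the model learns from text",
    "the next word depends on the context",
]
counts = train_bigram(corpus)
print(next_word_distribution(counts, "the"))
print(suggest(counts, "the"))
```

Here `next_word_distribution(counts, "the")` assigns probability 0.4 to "model", 0.4 to "next", and 0.2 to "context", and `suggest` ranks them the way a keyboard's suggestion bar would.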

GPT is an attempt to study how probabilistic language models behave at large scale. For context, GPT-3, the most recent model in the family at the time of writing, was trained on five of the largest NLP datasets available on the internet: Common Crawl, WebText2, Books1, Books2, and Wikipedia. In terms of capacity, GPT-3 is one of the largest machine learning models to date: its largest version contains 175 billion learnable parameters. For comparison, the largest language model before GPT-3, Microsoft’s Turing Natural Language Generation (T-NLG), has 17 billion learnable parameters.

GPT-3 learns deep correlations in human-written text by predicting which word comes next, given a few words as input. This task is closely related to the autocomplete feature found on most smartphone keyboards.

“As you type, you can see choices for words and phrases you’d probably type next, based on your past conversations, writing style, and even websites you visit”. Source: Apple Support webpage.

Most importantly, these correlations create a deep internal text representation that allows GPT to perform various tasks without retraining the system from scratch. Such tasks include Question Answering, Translation, Common Sense Reasoning, Reading Comprehension, and more. For instance, in Question Answering, the GPT model is assessed on its ability to answer questions about broad factual knowledge. Here is an example question-answer pair from one of the datasets used to evaluate GPT-3.

Question: The Dodecanese Campaign of WWII that was an attempt by the Allied forces to capture islands in the Aegean Sea was the inspiration for which acclaimed 1961 commando film?

Answer: The Guns of Navarone

Back to Copilot. To understand how well Copilot writes code, we need to understand how Codex’s performance was evaluated. In the original paper, OpenAI researchers introduced HumanEval (Hand-Written Evaluation Set), a benchmark for the task of generating Python functions from docstrings. Generated functions are evaluated for correctness using a metric called pass@k: the system is allowed to generate k candidate solutions for a problem, and if any of the candidates passes the problem’s unit tests, the problem is considered solved.
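In practice, the Codex paper computes pass@k with lower variance than literally generating k samples: it draws n ≥ k samples per problem, counts the c samples that pass, and uses the unbiased estimator pass@k = 1 - C(n-c, k) / C(n, k), averaged over all problems. The sketch below implements that estimator in pure Python; the per-problem `(n, c)` results are made-up numbers for illustration, and the function names are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: completions sampled, c: completions that passed the unit tests,
    k: attempt budget. Returns the probability that at least one of k
    completions drawn without replacement from the n samples is correct.
    """
    if k > n:
        raise ValueError("k must not exceed n")
    if n - c < k:
        # Every possible draw of k samples contains at least one correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average the per-problem estimates over the whole benchmark."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results: (samples drawn, samples that passed) for three problems.
results = [(10, 0), (10, 1), (10, 5)]
print(benchmark_pass_at_k(results, k=1))
print(benchmark_pass_at_k(results, k=5))
```

For example, with 10 samples of which only 1 passes, pass@1 is 0.1 but pass@5 is 0.5, which shows why allowing more attempts raises the score so sharply.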

The HumanEval dataset contains 164 hand-written programming problems with corresponding unit tests. Each problem includes a function signature, a docstring, a body, and an average of 7.7 unit tests.

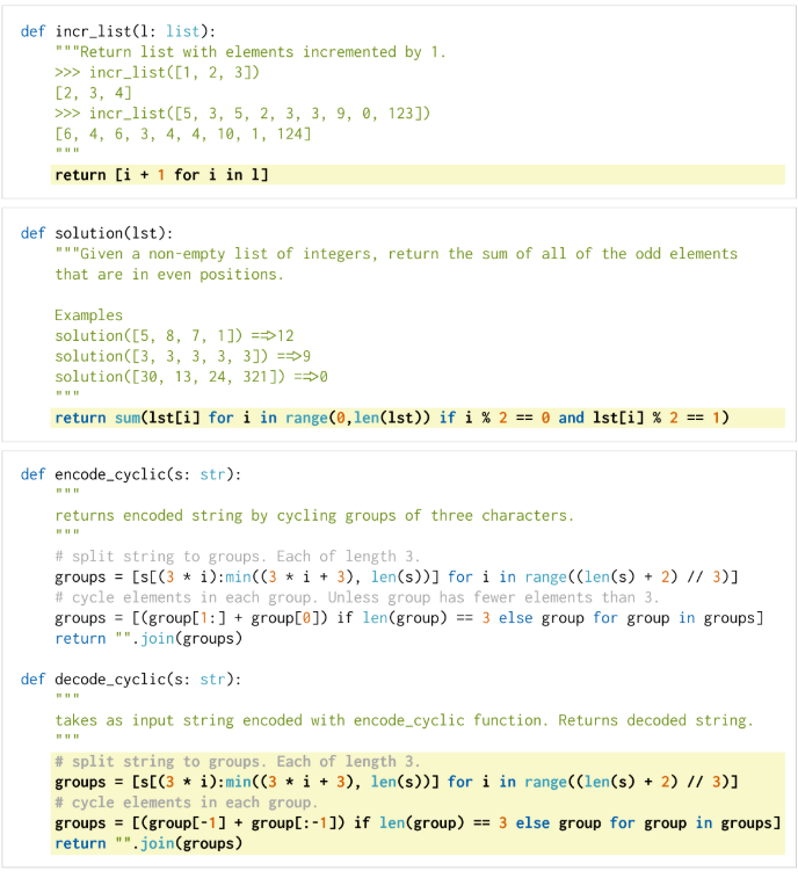

HumanEval problems assess language comprehension, algorithms, and simple mathematics, and some are comparable to simple software interview questions. As with pass@k, the model may generate multiple samples for each problem, and the problem counts as solved if any sample passes its unit tests. The figure shows some example problems: for evaluation, Codex receives the context (in white) containing the function header and docstring, and generates the solution (in yellow). Note that for the decode_cyclic function, the context also includes the corresponding encoding function.

Some example problems from the HumanEval dataset. Source: Evaluating Large Language Models Trained on Code arXiv:2107.03374v2.
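The decode_cyclic case can be sketched as a tiny evaluation harness. This is a minimal illustration, assuming the prompt below approximates the HumanEval problem shown in the figure (the helper body and docstrings are our reconstruction, not the dataset’s exact text); the candidate completions and the names `PROMPT`, `CANDIDATES`, `check`, `passes`, and `solved` are hypothetical. Each candidate body is appended to the prompt, executed, and checked against unit tests, and the problem counts as solved if any candidate passes.

```python
# Context given to the model: the helper encode_cyclic plus the signature and
# docstring of decode_cyclic, whose body is left for the model to write.
PROMPT = '''
def encode_cyclic(s: str):
    """Return s encoded by cycling each group of three characters."""
    groups = [s[3 * i:min(3 * i + 3, len(s))] for i in range((len(s) + 2) // 3)]
    groups = [g[1:] + g[0] if len(g) == 3 else g for g in groups]
    return "".join(groups)


def decode_cyclic(s: str):
    """Take a string encoded with encode_cyclic and return the decoded string."""
'''

# Hypothetical completions sampled from a model (bodies of decode_cyclic).
CANDIDATES = [
    "    return s[::-1]\n",                          # plausible-looking but wrong
    "    return encode_cyclic(encode_cyclic(s))\n",  # two more rotations undo it
]


def check(namespace: dict) -> None:
    """Unit tests: decoding must invert encoding."""
    encode = namespace["encode_cyclic"]
    decode = namespace["decode_cyclic"]
    for text in ["", "ab", "abc", "hello world", "copilot!"]:
        assert decode(encode(text)) == text


def passes(completion: str) -> bool:
    """Run prompt + completion and the unit tests; any exception means failure."""
    namespace: dict = {}
    try:
        # WARNING: exec runs model-generated code; a real harness should sandbox it.
        exec(PROMPT + completion, namespace)
        check(namespace)
    except Exception:
        return False
    return True


def solved(candidates: list[str]) -> bool:
    """A problem is solved if any candidate passes (the pass@k criterion)."""
    return any(passes(c) for c in candidates)


print([passes(c) for c in CANDIDATES])
print(solved(CANDIDATES))
```

Here the first candidate fails the round-trip test and the second passes, so the problem counts as solved.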

To put these numbers in perspective, if Codex is allowed to generate a single candidate program per problem (pass@1), it produces correct functions for 28.8% of the 164 programming problems in HumanEval. If it is allowed to generate 100 candidate solutions per problem (pass@100), it produces correct functions for 77.5% of the problems in the benchmark.

Like GPT-3, Codex is trained on a very large dataset. According to the original Codex paper, the training data was collected in May 2020 from 54 million public software repositories hosted on GitHub, from which the researchers extracted 179 GB of unique Python files under 1 MB. These files were then filtered to remove auto-generated code and to limit the average line length, resulting in a final dataset of 159 GB.
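The filtering stage can be sketched as follows. This is a minimal illustration under stated assumptions: the text only says that auto-generated files were removed and average line length was limited, so the numeric thresholds, the auto-generation markers, and exact-hash deduplication used for "unique" files below are hypothetical choices, not the procedure from the paper.

```python
import hashlib

# Illustrative thresholds; assumptions, not the exact rules used to build Codex's dataset.
MAX_FILE_BYTES = 1_000_000  # keep files under 1 MB, as described in the text
MAX_AVG_LINE_LEN = 100
MAX_LINE_LEN = 1000
# Hypothetical markers that often appear near the top of generated files.
AUTOGEN_MARKERS = ("auto-generated", "autogenerated", "do not edit", "generated by")


def keep_file(source: str) -> bool:
    """Apply size, auto-generation, and line-length filters to one file."""
    if len(source.encode("utf-8")) >= MAX_FILE_BYTES:
        return False
    lines = source.splitlines()
    if not lines:
        return False
    header = "\n".join(lines[:10]).lower()
    if any(marker in header for marker in AUTOGEN_MARKERS):
        return False
    lengths = [len(line) for line in lines]
    if sum(lengths) / len(lengths) > MAX_AVG_LINE_LEN:
        return False
    return max(lengths) <= MAX_LINE_LEN


def build_dataset(files: dict[str, str]) -> dict[str, str]:
    """Keep unique Python files (exact duplicates removed by hash) that pass the filters."""
    seen: set[str] = set()
    kept: dict[str, str] = {}
    for path, source in files.items():
        if not path.endswith(".py"):
            continue
        digest = hashlib.sha256(source.encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        if keep_file(source):
            kept[path] = source
    return kept


files = {
    "math_utils.py": "def add(a, b):\n    return a + b\n",
    "copy_of_math_utils.py": "def add(a, b):\n    return a + b\n",
    "api_pb2.py": "# Generated by a code generator. DO NOT EDIT!\nx = 1\n",
    "blob.py": "DATA = '" + "x" * 5000 + "'\n",
    "README.md": "# Not Python\n",
}
print(sorted(build_dataset(files)))
```

On this sample input, only `math_utils.py` survives: its copy is a duplicate, `api_pb2.py` carries a generated-code marker, `blob.py` has an overly long line, and `README.md` is not a Python file.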

Although GitHub Copilot has generated a lot of enthusiasm in the developer community, it clearly cannot, in its current state, replace developers in any sense. Moreover, even when it is used as a pair programming assistant, trusting Copilot’s code too much can give you some headaches. The Codex paper discusses some of the system’s limitations: given its current accuracy, it can fail to deliver correct solutions to more complex problems, so relying blindly on its output is not ideal in every situation.

Beyond code quality, the launch of GitHub Copilot has sparked some very important debates, one of the most important of which relates to licensing. In short, a license tells others what they can and cannot do with the code you wrote, and most of the code available on online platforms such as GitHub falls under some type of license.

You’re under no obligation to choose a license. However, without a license, the default copyright laws apply, meaning that you retain all rights to your source code and no one may reproduce, distribute, or create derivative works from your work.

OpenAI and GitHub state that the system that powers Copilot was trained on public software repositories hosted on GitHub. This raises the main concern: can GitHub take all the public code available on its platform and use it to build a system that will be used commercially?

What about repositories under GPL-based licenses? They may be a problem because GPL licenses are often described as viral. Their terms are commonly summarized as follows:

You may copy, distribute and modify the software as long as you track changes/dates in source files. Any modifications to or software including (via compiler) GPL-licensed code must also be made available under the GPL along with build & install instructions. (GPL-V2)

and

This license is mainly applied to libraries. You may copy, distribute and modify the software provided that modifications are described and licensed for free under LGPL. Derivative works (including modifications or anything statically linked to the library) can only be redistributed under LGPL, but applications that use the library don’t have to be. (LGPL-V3)

So, the question many people are asking is: should code produced by a language model trained on GPL-licensed code also be distributed under the GPL? Put differently, is the code produced by Copilot a derivative work of its training data? These are open questions that may well have legal implications.

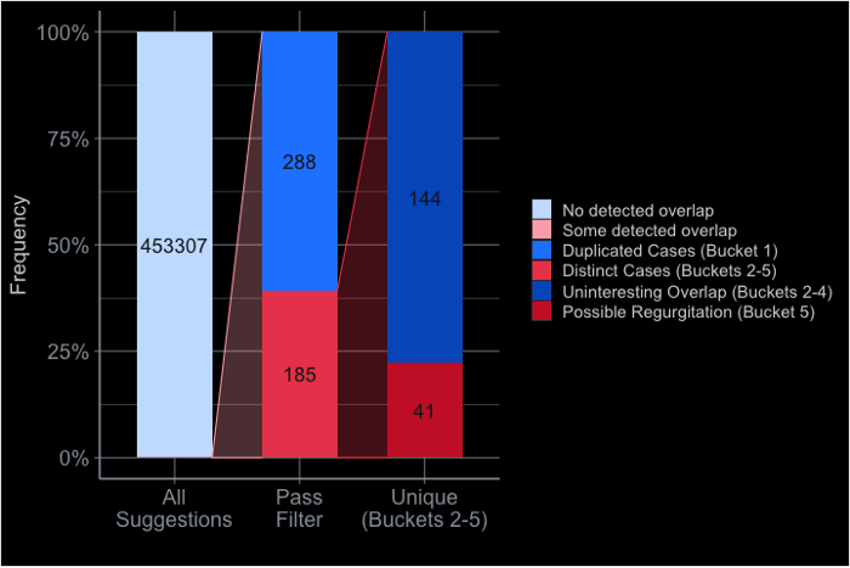

Copilot has been reported to output personal information such as API keys. This is a serious concern, and it also opens a debate about how much large language models memorize their training data instead of generating novel content. GitHub conducted a study reporting that only 0.1% of the output produced by Copilot can be considered a reproduction of the training set. See GitHub’s Research recitation report for more details.
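One simple way to think about recitation is to ask whether a suggestion shares a long run of tokens with the training data. The sketch below assumes a crude regex tokenizer and a hypothetical window of 8 tokens; it is not the methodology of GitHub’s study, only an illustration of the idea, and all names in it are hypothetical.

```python
import re


def tokenize(code: str) -> list[str]:
    """Crude tokenizer: runs of word characters, or single punctuation characters."""
    return re.findall(r"\w+|[^\w\s]", code)


def ngrams(tokens: list[str], n: int) -> set[tuple[str, ...]]:
    """All runs of n consecutive tokens (empty if there are fewer than n tokens)."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def build_index(training_files: list[str], n: int) -> set[tuple[str, ...]]:
    """Index every n-token run that appears anywhere in the training corpus."""
    index: set[tuple[str, ...]] = set()
    for source in training_files:
        index |= ngrams(tokenize(source), n)
    return index


def is_recitation(suggestion: str, index: set[tuple[str, ...]], n: int) -> bool:
    """Flag a suggestion if it shares any n-token run with the training data."""
    return not ngrams(tokenize(suggestion), n).isdisjoint(index)


N = 8  # hypothetical window size
training_corpus = [
    "def fib(n):\n    return n if n < 2 else fib(n - 1) + fib(n - 2)\n",
]
index = build_index(training_corpus, N)
print(is_recitation(training_corpus[0], index, N))
print(is_recitation("def square(x):\n    return x * x\n", index, N))
```

A verbatim copy of the indexed function is flagged, while the short, novel `square` function is not. A real study would need a far larger index and a more careful notion of what counts as a meaningful match.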

Source: Research recitation GitHub.

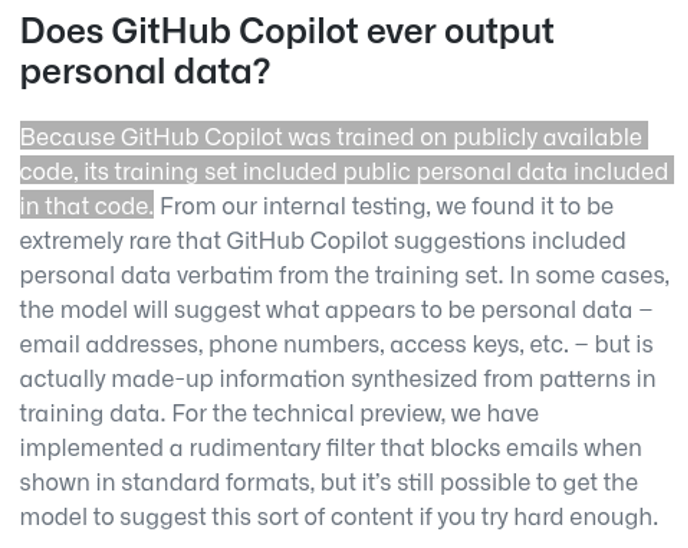

Regarding personal data, the GitHub Copilot webpage warns users of this possibility. Any personal information that Copilot outputs is a direct consequence of the training data used to build the system. Since Codex learns from millions of public code repositories, it is easy to imagine that many of them don’t follow security guidelines and leave personal data such as passwords and API keys unprotected.
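Because such secrets come from the training data, it is prudent to scan suggestions before accepting them. Below is a minimal sketch; the two regular expressions are illustrative assumptions (real secret scanners use many more rules), and `find_secrets` is a hypothetical helper. The safer variant reads the key from an environment variable instead of hard-coding it.

```python
import re

# Illustrative rules only; production secret scanners use far larger rule sets.
SECRET_PATTERNS = {
    # Long-term AWS access key IDs: "AKIA" followed by 16 uppercase letters/digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A credential-like variable assigned a quoted literal of 8+ characters.
    "hardcoded_credential": re.compile(
        r"(?i)\b(?:api_?key|secret|password|token)\s*=\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets(code: str) -> list[tuple[int, str]]:
    """Return (line number, rule name) pairs for lines that look like they hold a secret."""
    hits = []
    for lineno, line in enumerate(code.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


# A hypothetical suggestion that hard-codes credentials...
suggestion = 'API_KEY = "0123456789abcdef0123"\nAWS_ID = "AKIAABCDEFGHIJKLMNOP"\n'
# ...and a safer version that reads the key from the environment at runtime.
safer = 'import os\nAPI_KEY = os.environ["API_KEY"]\n'

print(find_secrets(suggestion))
print(find_secrets(safer))
```

The first snippet is flagged on both lines, while the environment-variable version produces no hits.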

Source: GitHub Copilot

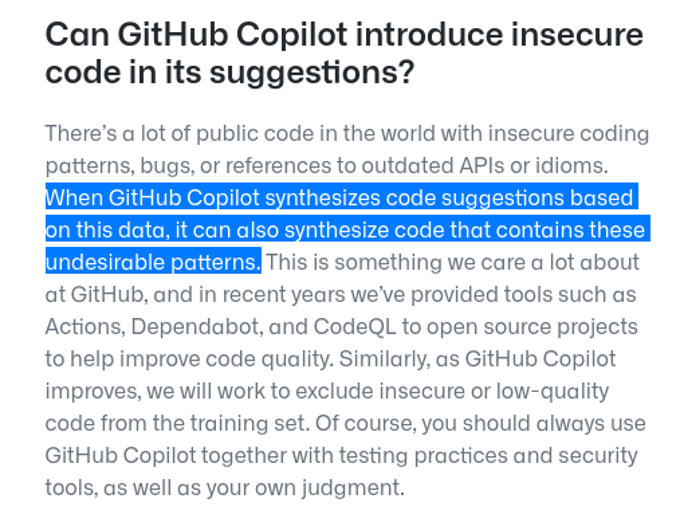

In fact, training a code-synthesizing deep neural network on a large corpus of publicly available code also raises questions about how good Copilot can be at writing high-quality code. Neural networks are data-hungry correlation machines. In this sense, if we assume that many of the repositories used to train Codex were not written by experienced programmers who care about code quality, follow strict security guidelines, and use effective code patterns, Copilot may be susceptible to producing insecure and poorly written code as well.
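A classic example of the insecure pattern this paragraph warns about is SQL injection. The sketch below, using Python’s built-in `sqlite3` module with an in-memory database, contrasts a query built by string formatting with a parameterized one; the table and function names are hypothetical, and this illustrates a common vulnerable pattern rather than a documented Copilot output.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Insecure: user input is spliced directly into the SQL text.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Parameterized: the driver passes `name` as data, never as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

malicious = "x' OR '1'='1"
print(find_user_unsafe(conn, malicious))  # the injected OR clause matches every row
print(find_user_safe(conn, malicious))    # treated as a literal name, so nothing matches
```

With the crafted input, the formatted query returns every row, while the parameterized query looks for a user literally named `x' OR '1'='1` and returns nothing.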

Source: GitHub Copilot.

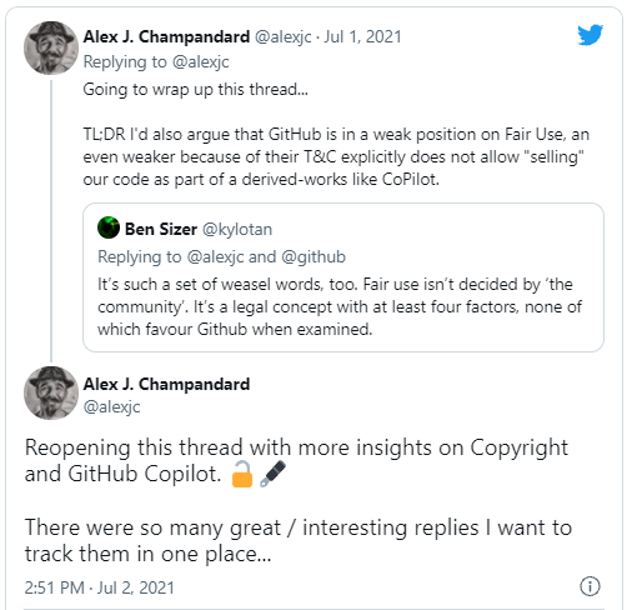

To conclude, this useful Twitter thread expands on many potential problems relating to copyright and Copilot.

Source: Twitter

Key Takeaways

To summarize, GitHub Copilot is undoubtedly a breakthrough application of deep neural networks. Seeing it in action feels like watching a magic show, and even though it is not a perfect system, we can expect it to improve over time. Even in its beta stage, Copilot’s benefits are evident. However, treating its output as a magic black box and relying on it too heavily may create unexpected extra work. Copilot aims to change the programming landscape: as such tools advance, programmers will be free to spend more time on problems that require human reasoning, while the parts of the development process that rely on standard boilerplate and memorized solutions are automated.

About Encora

Fast-growing tech companies partner with Encora to outsource product development and drive growth. Contact us to learn more about our software engineering.